Model Adjacent

How to think about products in the models era

In the era of human work and cognition, we built software & tooling to accelerate human capabilities in cognition, communication & action. In the models era, where model performance ranges from savant to idiot, we are seeing a new kind of product development.

Model-adjacent products (MAPs) represent a step forward in the models era. They go beyond isolated large language models (LLMs) toward living ecosystems where models interact dynamically with external tools and data sources and showcase specialized capabilities. We see these as products that methodically remove the limitations of LLMs as static knowledge repositories with fixed context windows, turning them into continual-learning agents capable of true autonomy.

While base models continue their march along the reasoning and capability frontiers, we are seeing new products built around them, from assistants to model-native tools, skills, verification systems, simulators, and adaptation techniques that extend the autonomy frontier for models.

This includes everything from API-based connectors to reinforcement learning frameworks that provide objective feedback on model performance. As we move from conversational interfaces to agentic workflows, these adjacent components become increasingly essential for handling complex, multi-step tasks in live, real-world environments where reliability, cost efficiency, and data privacy are front and center.

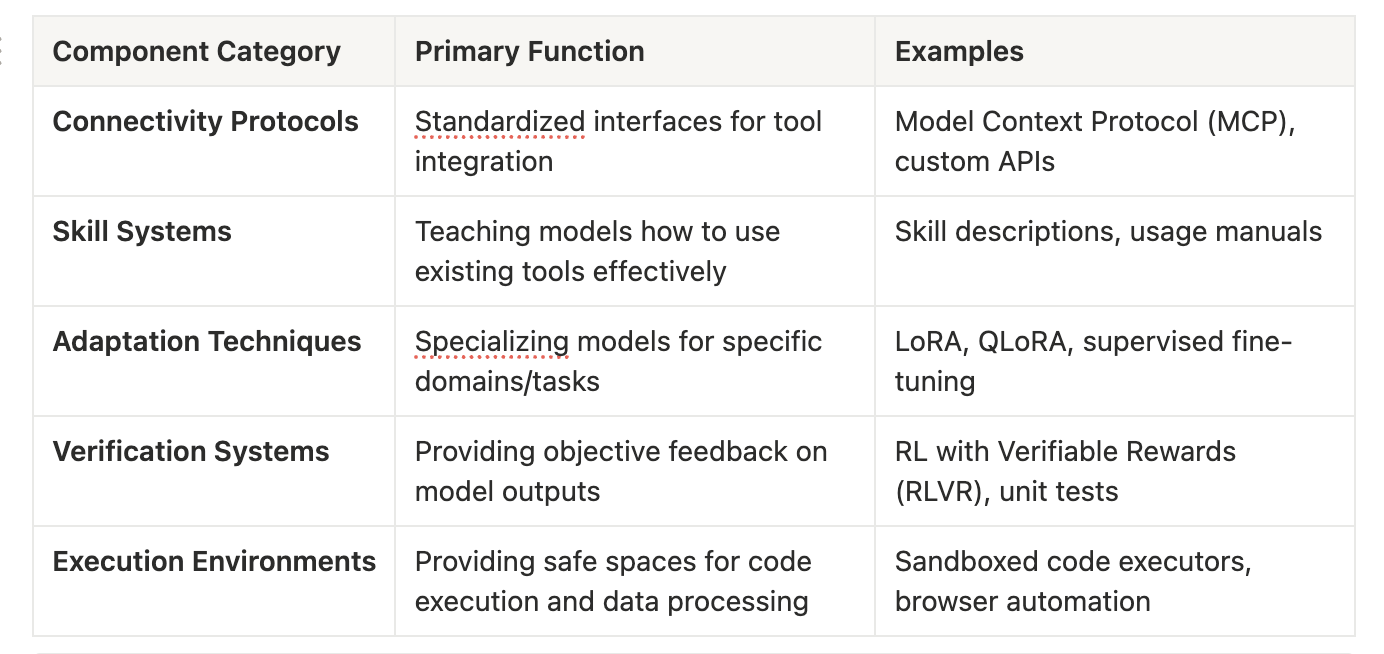

Table: Key Components of Model-Adjacent Ecosystems

Much like consumer-first tools needed designers with an intrinsic understanding of human behaviour, comprehension, and physical capabilities (thumbs, anyone?), model-adjacent products will be built with a native understanding of model capabilities, reasoning, and flaws (such as hallucinations). That means understanding mechanisms like reward functions, token windows, hallucination controls, verifiers, and context and memory tooling.

Engineering model-adjacent products will require different expectations of researchers, engineers, and product managers, who must work together to demystify capabilities, understand constraints, and adapt behaviour for model autonomy.

2026’s gonna be fun